FaceApp and similar reality-warping applications are especially fun to use in ways their designers never intended. Along similar lines, Google’s DeepDream (built to visualize what image-recognition networks have learned) creates fascinating results using photographs but is even more stunning when applied to representations of cityscapes.

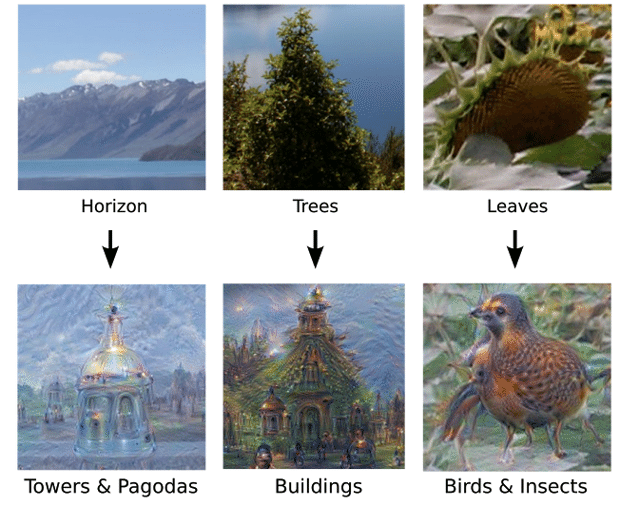

DeepDream grew out of Google researchers’ work training neural networks to identify and differentiate images: they discovered that a trained network could be made to “over-interpret” what it sees as well. In short: asked to amplify whatever patterns it detects, the network starts to “read into” images from previous experience, resulting in an array of beautiful (if disturbing) hybrids.

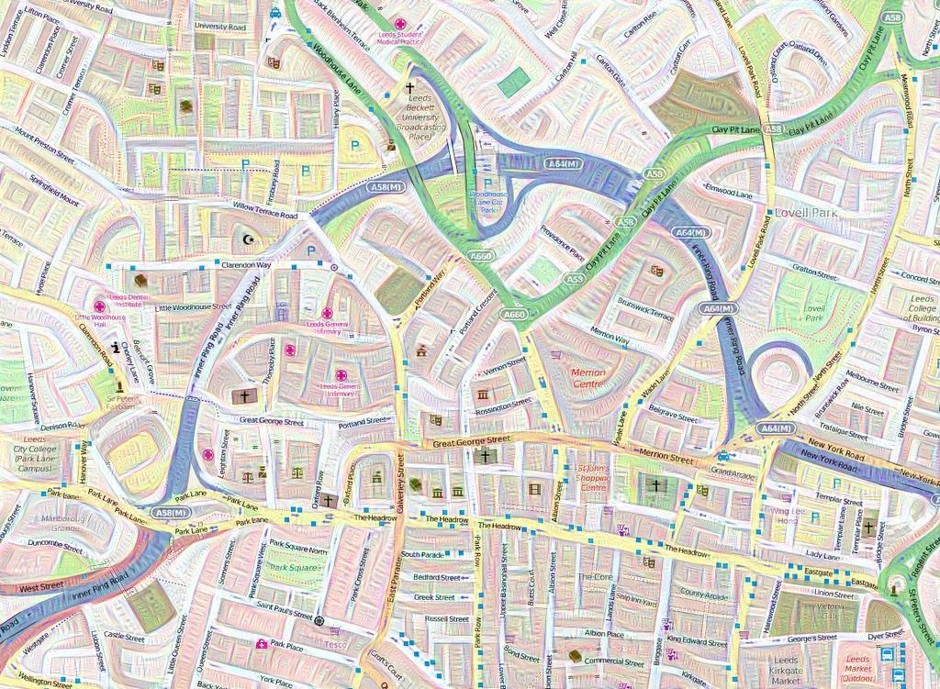

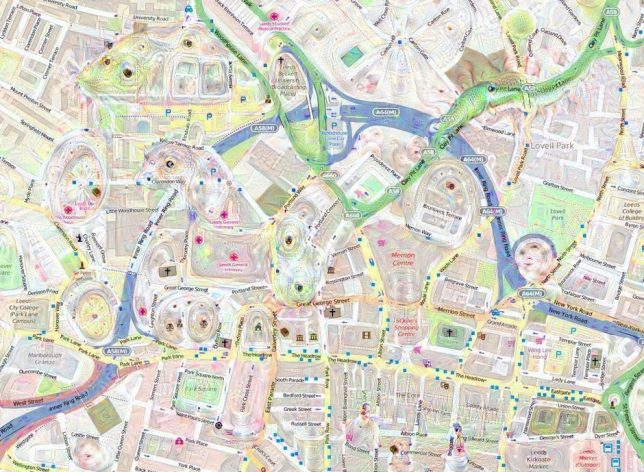

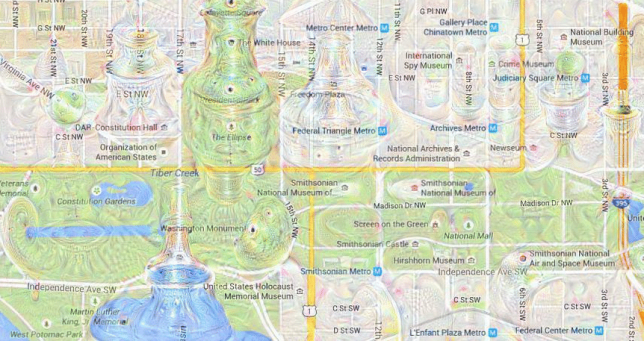

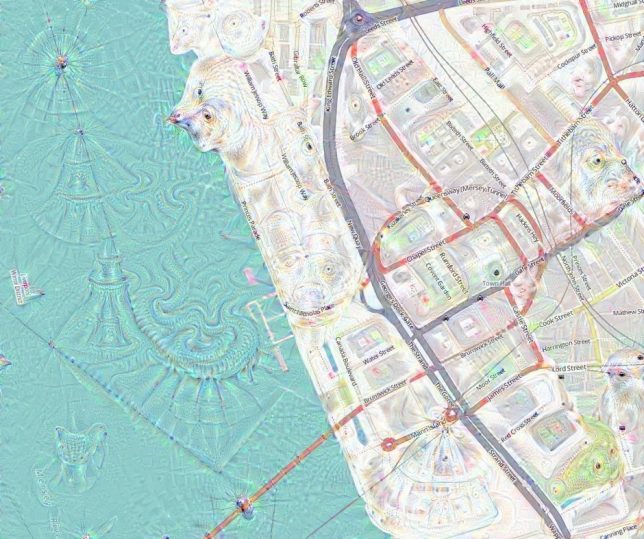

Once DeepDream went public, mapmakers were among those intrigued by its possibilities for geo-visualization, turning flat maps into seemingly living landscapes. Tim Waters, a geospatial developer, began taking OpenStreetMap data and running it through the system, generating these strangely psychedelic urban environments.

He discovered that a short run could create fractal and quilting effects, while longer, repeated passes started to introduce faces and creatures to the mix.
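For the curious, the difference between a short run and a long one can be sketched in a few lines of Python. This is only a toy illustration, not Waters's workflow or Google's code: real DeepDream amplifies the activations of a trained convolutional network, whereas here a single hand-written edge filter stands in for a learned layer. The function names (`activations`, `dream_step`, `dream`, `response`), the kernel, and the step size are all assumptions made up for this example.

```python
import numpy as np

def activations(image, kernel):
    """Slide the kernel over the image (valid cross-correlation)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    act = np.zeros((out_h, out_w))
    for u in range(kh):
        for v in range(kw):
            act += kernel[u, v] * image[u:u + out_h, v:v + out_w]
    return act

def dream_step(image, kernel, step_size=0.05):
    """One gradient-ascent step on 0.5 * sum(activations ** 2)."""
    act = activations(image, kernel)
    kh, kw = kernel.shape
    out_h, out_w = act.shape
    grad = np.zeros_like(image)
    # Gradient of the objective with respect to each pixel.
    for u in range(kh):
        for v in range(kw):
            grad[u:u + out_h, v:v + out_w] += kernel[u, v] * act
    # Normalize so the step size means roughly the same thing every pass.
    grad /= np.abs(grad).mean() + 1e-8
    # Nudge pixels toward "more of what the filter responds to".
    return np.clip(image + step_size * grad, 0.0, 1.0)

def dream(image, kernel, iterations):
    """Repeat the amplification step; more passes, stronger patterns."""
    for _ in range(iterations):
        image = dream_step(image, kernel)
    return image

def response(image, kernel):
    """How strongly the filter fires across the whole image."""
    return float(np.sum(activations(image, kernel) ** 2))

# Hypothetical stand-in for a learned feature: a vertical-edge detector.
edge_kernel = np.array([[1.0, 0.0, -1.0],
                        [2.0, 0.0, -2.0],
                        [1.0, 0.0, -1.0]])

rng = np.random.default_rng(0)
base = rng.random((32, 32))                # stand-in for a map tile
short_run = dream(base, edge_kernel, 5)    # a few passes
long_run = dream(base, edge_kernel, 200)   # many repeated passes
```

Comparing `response(base, edge_kernel)`, `response(short_run, edge_kernel)` and `response(long_run, edge_kernel)` shows the filter firing more strongly the longer the loop runs. With a deep network in place of the single filter, the features being exaggerated are eyes, fur and faces rather than edges, which is one way to think about why longer runs start to populate a map with creatures.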

Above: monkeys and frogs seem to emerge from the grid, while a coastal region forms the head of a bear, making the landscape look like a giant bearskin rug. Overall, the effects are quite beautiful, creating a sense of depth and adding character to what would otherwise be fairly generic representations.