The question of who owns your face sounds absurd on the surface – of course you own it, it’s attached to your body after all. But in an era of facial recognition technology, in which your face can be scanned and added to databases without your knowledge or consent, the answer to that question gets a lot more complicated. Your unique composition of features might already be included in a collection used by data brokers, the government, police and advertising and tech companies to tag you in photos, match you to alleged criminal activity, sell information about you or teach neural networks how to refine facial recognition technology itself.

In fact, we have the internet and its databank of faces to thank for reaching this point. Millions upon millions of faces are now available for the scraping, a scale of collection that would have been difficult or impossible to achieve any other way. Computer scientists feed these images to artificial intelligence systems to “train” them to recognize faces, and advances in graphics processing allow the machines to sort through them at a whiplash pace. Very little human input is needed as the neural networks use their own algorithms to decide which similarities and differences between the faces are significant, making the whole thing a bit of a mystery.

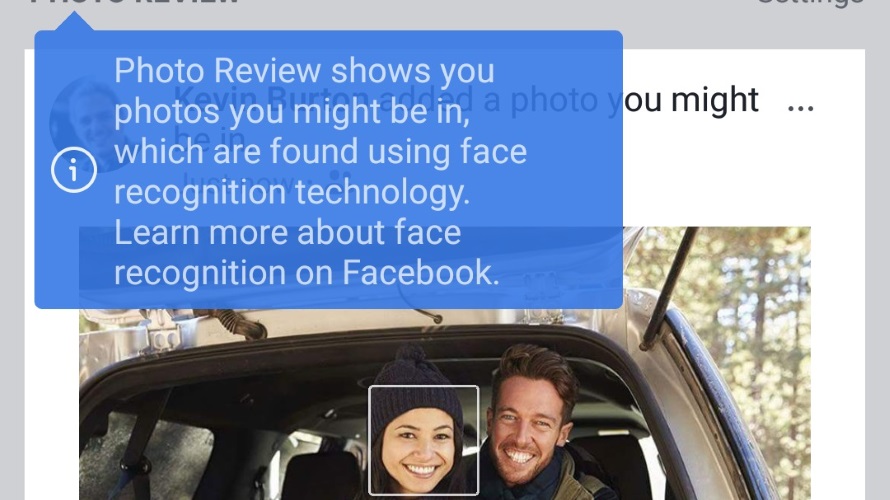

Many of us willingly make our faces available simply by using social media, lured in by apps like Snapchat and Instagram that map our features in order to apply cute filters and perform other tricks, or just by having photos of ourselves appear on the web. The convenience of features like Apple’s Face ID, which uses facial recognition to log in and pay for transactions, can also be hard to resist. But if you think avoiding the latest iPhones and apps will keep you out of these databases, you’re fooling yourself.

Surreptitious Scanning

At least 40 million surveillance cameras shoot billions of hours of footage a week in the United States alone, according to 2014 estimates. Many cities hope to fight crime by installing them in parks, intersections and sidewalks, often working in conjunction with the ones that peer out from private properties, and some are police-operated. Cities like New York, Washington, Chicago, Orlando and Los Angeles aren’t even required by law to reveal the extent of surveillance or how it’s used, and there are no enforceable rules to limit invasions of privacy or protect against abuse of surveillance systems.

Some of the cameras are equipped with real-time facial recognition technology, which can even identify moving targets. Plus, airports around the world are beginning to integrate facial recognition technology into the check-in process as well as their general surveillance systems, so you could be recognized before you even walk through the doors. You might even be scanned while attending a Taylor Swift concert as the singer’s security team searches the crowd for stalkers.

All of this means our faces can easily be recorded without our knowledge and used for remote surveillance, checked against databases of driver’s license photos and mugshots as well as photographs taken from the internet that can be tied to social media accounts, website profiles or other identifying information. Some people may learn this and think to themselves, “well, I’m not doing anything wrong, so it doesn’t affect me.” Unfortunately, that’s not necessarily true.

The Problem of False Positives

Amazon is one of the primary tech giants playing with facial recognition technology in disturbing ways, including pitching its Rekognition software to officials from Immigration and Customs Enforcement (ICE) and filing a patent that would use doorbell cameras to document and identify people considered to be “suspicious.” How, exactly, they determine who belongs in this database is unclear, but it looks like it would at least partially be user-submitted. Let’s say you move into a new home and knock on a neighbor’s door to introduce yourself. If they have a doorbell camera, they can record your face, realize they don’t know you and sort you into the “suspicious” file, which might be shared with the entire neighborhood and Amazon at large. But algorithms also play a role, and like humans, they’re often wrong.

First of all, complete strangers can look like twins, or at least similar enough for AI to flag one in place of another. But even more troubling is the fact that facial recognition algorithms struggle to distinguish faces with dark skin. In fact, in a test by the ACLU, Amazon’s Rekognition program falsely matched 28 members of Congress with mugshots, including a disproportionate number of members of the Congressional Black Caucus. A recent MIT study also found that AI had much more trouble identifying black women than white men. It’s not hard to imagine what would happen if the software were integrated into police body cams, giving officers false “evidence” that the people before them are criminals. By the way, Rekognition is currently publicly available and wildly cheap; the ACLU test cost them just $12.33.
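To see why false positives pile up as databases grow, here is a rough back-of-the-envelope sketch. It assumes every comparison between your face and a stored photo carries the same small, independent chance of a false match. Real systems don't behave that neatly, and the rate used below is a made-up number for illustration, not a measured figure for Rekognition or any other product.

```python
def prob_any_false_match(per_comparison_rate: float, database_size: int) -> float:
    """Chance that one innocent face falsely matches at least one stored photo,
    assuming each comparison is independent with the same false-match rate."""
    return 1.0 - (1.0 - per_comparison_rate) ** database_size


# Hypothetical false-match rate, chosen only to illustrate the trend.
hypothetical_rate = 1e-5

for size in (1_000, 25_000, 1_000_000):
    chance = prob_any_false_match(hypothetical_rate, size)
    print(f"{size:>9,} photos -> {chance:.1%} chance of at least one false match")
```

The point isn't the exact percentages. Under these assumptions, an error rate that looks negligible for a single comparison becomes hard to ignore once a face is checked against a very large collection.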

Your Face Can Be Hacked

When Apple debuted its Face ID for the iPhone X, the company claimed that the chance a random person could unlock your protected device is just one in a million (compared to one in 50,000 for its previous fingerprint-based Touch ID technology). The feature projects over 30,000 invisible dots on your face to create a detailed facial map and stores that data in a “Secure Enclave” within the phone. Apple also said there’s no printed photo, video of a face or high-end mask that could possibly beat the system. It was wrong. Vietnamese security firm Bkav cracked Face ID with composite masks made of 3D-printed plastic, silicone, makeup and paper cutouts. Meanwhile, Forbes managed to break into four different Android phones using a 3D-printed head.
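For a sense of what Apple's quoted odds mean in practice, the short sketch below compares the two figures and estimates how many strangers in a crowd would pass by pure chance. The crowd size is a hypothetical number picked for illustration, and the calculation only covers random matches. It says nothing about deliberate attacks like the masks described above.

```python
from fractions import Fraction

# Odds as quoted from Apple in this article.
FACE_ID_RATE = Fraction(1, 1_000_000)
TOUCH_ID_RATE = Fraction(1, 50_000)


def expected_random_unlockers(rate: Fraction, crowd_size: int) -> float:
    """Average number of strangers in a crowd who would pass by chance alone."""
    return float(rate * crowd_size)


# How many times rarer a chance Face ID match is than a chance Touch ID match.
print(TOUCH_ID_RATE / FACE_ID_RATE)

# Hypothetical city-sized crowd, used only for illustration.
hypothetical_crowd = 8_000_000
print(expected_random_unlockers(FACE_ID_RATE, hypothetical_crowd))
print(expected_random_unlockers(TOUCH_ID_RATE, hypothetical_crowd))
```

Even taking the one-in-a-million claim at face value, a big enough population still contains a handful of people who could unlock your phone by accident.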

Far beyond simply gaining access to your devices, hackers can steal your entire face and use it for all sorts of nefarious purposes, and not just by producing a 3D-printed mask of your countenance and wearing it while committing crimes. It’s astonishingly easy to use readily available software to impersonate other people, creating convincing videos that aren’t quite what they seem. The implications of fakes like the Barack Obama video are clear, and as the technology is refined, they could grow increasingly difficult to spot.

Maybe these “deepfakes” are funny when it’s just Nicolas Cage grafted onto the bodies of other actors in dozens of movies, but ordinary women whose faces are stolen and used to create deceptively real-looking porn videos don’t find it so humorous. Such videos can be used to humiliate, defame and target women for abuse, whether for the creator’s own pleasure or as a form of revenge porn. So far, victims have no legal recourse; experts say deepfakes are too untraceable to investigate and exist in a legal grey area, meaning they could be protected as free speech.

There’s No Going Back Now

Facial recognition technology isn’t conceptual, theoretical or far off in the future. It’s here to stay. In China, it has already become deeply rooted in government schemes to control the populace. With two hundred million public surveillance cameras, the government aims to have an omnipresent, fully networked and fully controllable nationwide system of facial recognition by 2020, working in conjunction with cell phone signals and digital financial transactions to track citizens’ every move. By the time it’s implemented, it will also include the nation’s mandatory “social credit” ratings, which use information like how people spend their time, what they buy and the company they keep to determine whether they’re worthy of jobs, housing, travel or simply free movement. Other countries will surely follow China’s example, and many are well on their way.

Even people involved in creating facial recognition technology are sounding the alarms. Brian Brackeen, CEO of the firm Kairos, wants tech firms to join together to keep the technology out of the hands of law enforcement. “Time is winding down but it’s not too late for someone to take a stand and keep this from happening,” he says.

In December 2018, Microsoft urged Congress to write laws regulating facial recognition software, including its own, in the year ahead. The company continues to profit from the controversial technology even as it advocates for regulation.

“We have turned down deals because we worry that the technology would be used in ways that would actually put people’s rights at risk,” said Microsoft president Brad Smith in a speech at the Brookings Institution.

As facial recognition technology continues to evolve, so will public perception around it. Have your feelings changed after learning about how it can be used?

If you’re feeling nervous already and wish you could just be invisible, check out these 15 anti-surveillance gadgets and wearables.