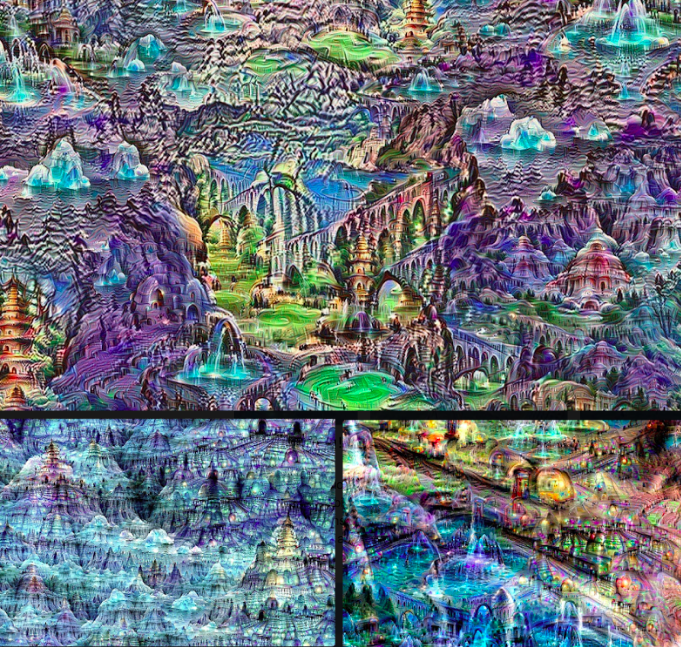

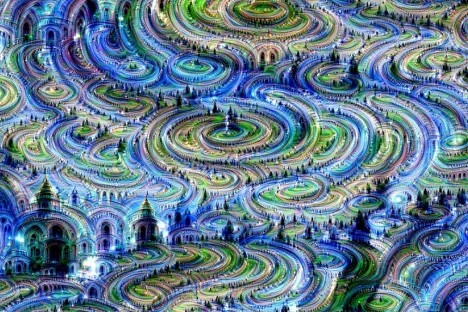

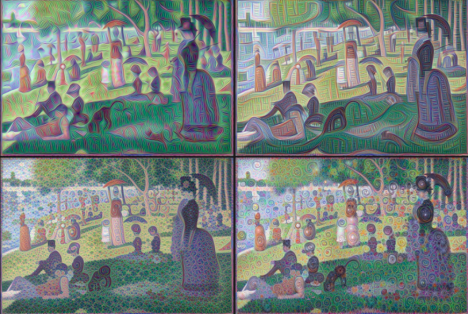

Those of us working in creative fields have often consoled ourselves that although automation may claim many other jobs, at least robots can’t make art. That’s not exactly true for a variety of reasons (depending on how you define ‘art’), but it really goes out the window when you look at these astonishing images released recently by Google. The landscapes produced on the company’s image recognition neural network reveal the answer to the question, “Can artificial intelligence dream?”

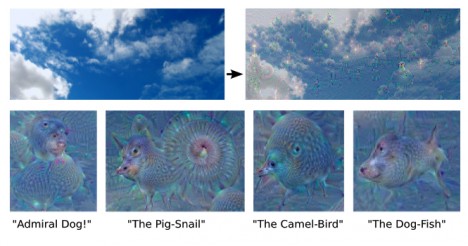

It turns out that it can – sort of. Google created a method to ‘teach’ its neural network to identify features like animals, buildings and objects in photographs. The computer highlights the features it recognizes. When that modified image is fed back to the network again and again, it’s repeatedly altered until it produces bizarre mashups that belong in a gallery of surreal art.

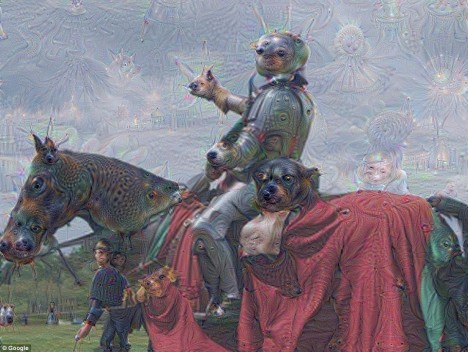

Suddenly, an image of a knight on a horse is filled with ghostly impressions of frogs, fish, dogs and flowers. The knight’s arm seems to have sprouted a koala head, while the head of another unrecognizable animal emerges from beneath the horse’s tail. In another image, a tree and field turn cotton candy pink, and the clouds transform into conjoined sheep monsters.

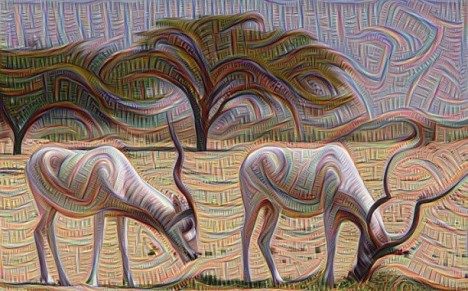

Technically, it’s more like the computers were fed psychedelics and asked to paint, rather than capturing random images that might flash through their artificial ‘minds’ when they’re idle. The computers look for patterns and edges within the photos and paintings when they’re trying to identify objects and shapes, leading to those strange ghostly images scattered randomly throughout. Those edges are brought out more in each successive layer until the network starts thinking it sees all sorts of things within them.

Google describes it as “inceptionism,” saying “We know that after training, each layer progressively extracts higher and higher-level features of the image, until the final layer essentially makes a decision on what the image shows.”

“So here’s one surprise: neural networks that were trained to discriminate between different kinds of images have quite a bit of the information needed to generate images too. Why is this important? Well, we train networks by simply showing them many examples of what we want them to learn, hoping they extract the essence of the matter at hand (e.g., a fork needs a handle and 2-4 tines), and learn to ignore what doesn’t matter (a fork can be any shape, size, color or orientation).”

“If we choose higher-level layers, which identify more sophisticated features in images, complex features or even whole objects tend to emerge. Again, we just start with an existing image and give it to our neural net. We ask the network: “Whatever you see there, I want more of it!” This creates a feedback loop: if a cloud looks a little bit like a bird, the network will make it look more like a bird. This in turn will make the network recognize the bird even more strongly on the next pass and so forth, until a highly detailed bird appears, seemingly out of nowhere.”

Check out lots more examples on Google’s research blog.